Local LLM Deployment & Data Sovereignty: The Strategic Imperative for Saudi Enterprises

In the rapidly evolving digital landscape of the Kingdom of Saudi Arabia, the intersection of Artificial Intelligence and Data Sovereignty has become the critical battleground for enterprise success. As we accelerate towards Vision 2030, C-suite executives in Riyadh, Jeddah, and NEOM face a pivotal decision: how to harness the power of Generative AI without compromising the confidentiality, security, and regulatory compliance of their corporate data. At iitwares.com, we believe the answer lies in the robust deployment of Local Large Language Models (LLMs).

The Urgency of Data Sovereignty in the Kingdom

Data is the new oil, but unlike oil, it is governed by increasingly strict digital borders. The Saudi National Data Management Office (NDMO) and the Personal Data Protection Law (PDPL) establish frameworks that restrict the transfer of sensitive government, corporate, and personal data outside the Kingdom's geographical boundaries, allowing such transfers only under defined conditions. Public AI services, such as the standard ChatGPT or Claude offerings, often process data on servers located in the US or Europe. For regulated workloads, this creates a significant compliance risk.

For Saudi enterprises handling regulated data, Local LLM deployment is not just a technical preference; it is often a regulatory necessity. By hosting AI models on-premise or within a sovereign Saudi cloud, organizations keep sensitive data from crossing international borders. This aligns with the national mandate to build a self-sufficient digital economy.

Risks of Public Cloud AI for Saudi Corporations

- Data Leakage: Depending on the provider's terms and service tier, prompts containing proprietary algorithms, financial forecasts, or citizen data may be retained or used to train public models, exposing trade secrets.

- Latency Issues: Round-tripping data to servers in the US or Europe adds network latency that can be unacceptable for real-time applications in sectors like Fintech and Oil & Gas.

- Regulatory Non-Compliance: Storing “Level 3” or “Level 4” classified data on foreign soil can breach KSA data-localization regulations.

Strategic Advantages of Local LLM Deployment

Deploying a Private GPT or a fine-tuned open-weights model (like Llama 3, Mistral, or Falcon) within your own infrastructure turns AI from a liability into a sovereign asset. iitwares.com specializes in architecting these environments for maximum ROI and security.

1. Uncompromised Security and Privacy

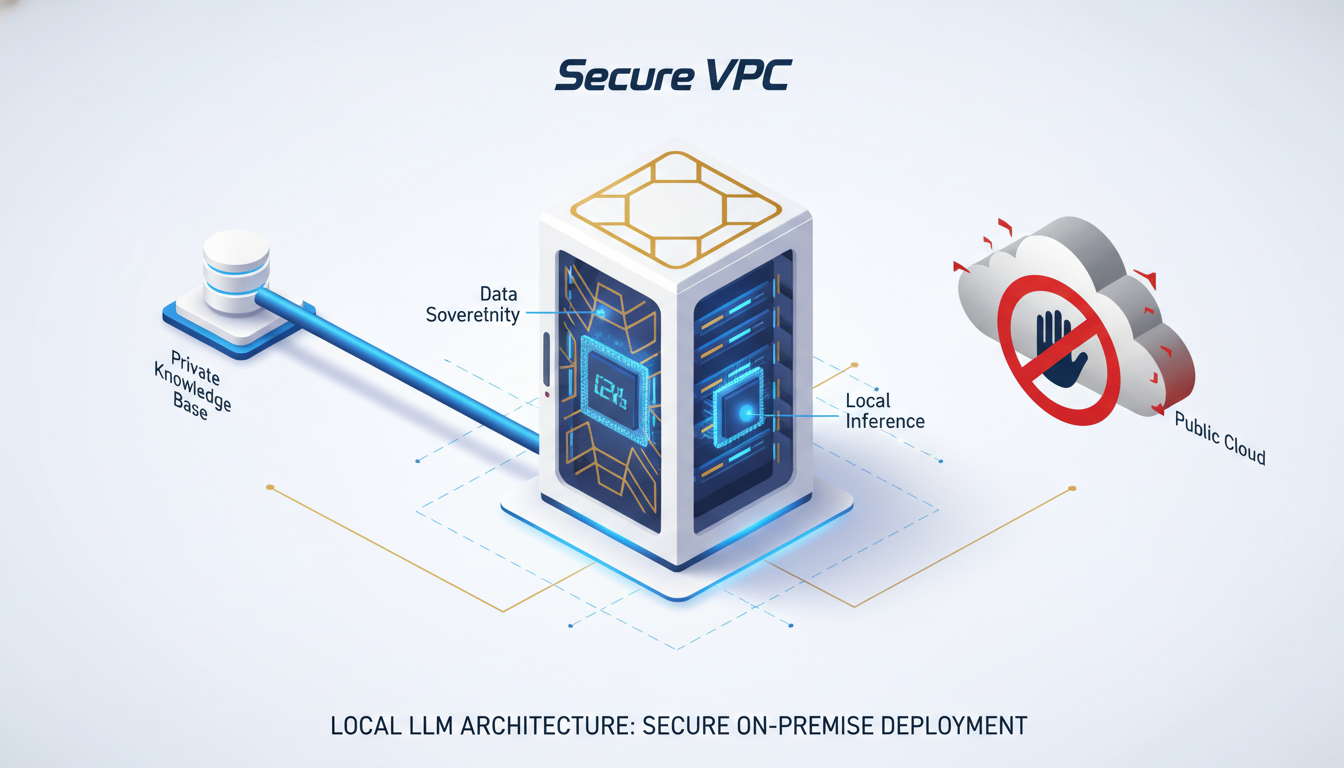

With a local deployment, your AI operates in an air-gapped environment or a secured VPC. This means your Enterprise Resource Planning (ERP) data, customer records, and internal communications never leave your control. You control the deployed model, its weights (subject to the base model's license), and the inference logs.
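One practical safeguard in such an environment is an egress guard: application code refuses to send prompts to any inference endpoint that is not on the private network. The sketch below is illustrative and rests on assumptions. The hostname `llm.internal.example`, the allowlist, and the `send_prompt` helper are all hypothetical. The check verifies network placement only (an allowlisted host, or a private or loopback IP). It does not verify physical location, so the allowlist itself must be limited to servers you know are hosted in-Kingdom.

```python
from ipaddress import ip_address
from urllib.parse import urlparse

# Hypothetical allowlist: internal hostnames known to point at in-Kingdom,
# privately hosted inference servers.
INTERNAL_HOSTS = {"llm.internal.example"}


def is_local_endpoint(url: str, internal_hosts: set[str] = INTERNAL_HOSTS) -> bool:
    """True only if the URL targets an allowlisted internal host or a
    private/loopback IP literal; any other public hostname is rejected."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host in internal_hosts:
        return True
    try:
        addr = ip_address(host)
    except ValueError:
        # A DNS name that is not on the allowlist: treat it as external.
        return False
    return addr.is_private or addr.is_loopback


def send_prompt(url: str, prompt: str) -> str:
    """Hypothetical client wrapper that blocks calls to non-approved endpoints."""
    if not is_local_endpoint(url):
        raise PermissionError(f"Blocked: {url} is not an approved in-Kingdom endpoint")
    # The real HTTP call to the local model server would go here.
    return f"queued {len(prompt)} chars for {url}"
```

Putting the check in a single wrapper means every team calls the model through one audited path instead of re-implementing the policy.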

2. Customization and Arabic Language Superiority

Generic global models are jacks-of-all-trades. By deploying Local LLMs, we can fine-tune models on your industry data and, crucially, on Saudi Arabic dialects and business context. This adaptation makes the output more culturally relevant and technically accurate for the Saudi market.

3. Cost Control and ROI

While the initial CapEx for GPU infrastructure (like NVIDIA H100s) or a sovereign cloud reservation is higher, the OpEx of running a Local LLM can be significantly lower at scale than the per-token pricing of public APIs. For the high-volume transaction processing typical of Saudi banking and government sectors, ROI can turn positive within 12-18 months, depending on workload volume and hardware utilization.
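The break-even logic is simple arithmetic. Payback arrives once cumulative API spend catches up with the upfront CapEx plus cumulative local running costs. The sketch below makes that explicit. Every figure in the usage lines is a purely hypothetical placeholder, not real pricing or a client result. Substitute your own token volumes, API prices, and infrastructure quotes.

```python
import math
from typing import Optional


def monthly_api_cost(tokens_per_month: int, price_per_million_tokens: float) -> float:
    """Monthly bill under token-based public API pricing."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_months(capex: float, monthly_local_opex: float, monthly_api: float) -> Optional[int]:
    """Smallest whole number of months n with n * monthly_api >= capex + n * monthly_local_opex.

    Returns None when local running costs are not below the API bill,
    because the upfront investment then never pays back.
    """
    monthly_saving = monthly_api - monthly_local_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(capex / monthly_saving)


# Hypothetical inputs (currency units are arbitrary):
api = monthly_api_cost(3_000_000_000, 30.0)       # 3B tokens/month at 30 per 1M tokens
months = break_even_months(1_000_000, 25_000, api)
print(api, months)  # 90000.0 16
```

The `None` branch matters. At low volumes, local OpEx (power, cooling, staff) can exceed the API bill, and no payback period exists.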

Implementing Local AI with iitwares.com

At iitwares.com, we follow a rigorous methodology to deploy sovereign AI for our clients:

Phase 1: Infrastructure Assessment

We evaluate whether your current data centers can host high-performance computing clusters. Where needed, we help you procure space within certified Saudi data centers (e.g., STC, Mobily) that meet NDMO standards.
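A first-pass sizing question in any infrastructure assessment is how many GPUs a model needs just to fit in memory. The sketch below computes weight memory as parameters × bytes per parameter. It assumes 2 bytes for FP16/BF16 and 0.5 bytes for 4-bit quantization. The `overhead` fraction for KV cache, activations, and runtime buffers is an assumption you must supply, because it varies with context length, batch size, and serving stack.

```python
import math


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params: int, bytes_per_param: float, gpu_memory_gb: float,
             overhead: float = 0.0) -> int:
    """Smallest GPU count whose combined memory holds the weights plus an
    assumed overhead fraction (KV cache, activations, buffers)."""
    required_gb = weight_memory_gb(num_params, bytes_per_param) * (1 + overhead)
    return math.ceil(required_gb / gpu_memory_gb)


# A ~70B-parameter model (roughly Llama 3 70B scale) on 80 GB GPUs:
print(weight_memory_gb(70_000_000_000, 2))              # 140.0 GB at FP16
print(min_gpus(70_000_000_000, 2, 80))                  # 2 GPUs, weights only
print(min_gpus(70_000_000_000, 2, 80, overhead=0.2))    # 3 GPUs with 20% assumed headroom
```

This is a lower bound. Throughput targets and redundancy usually push the real cluster size higher.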

Phase 2: Model Selection and Fine-Tuning

We select the optimal open-weights model—such as the UAE’s Falcon or Meta’s Llama 3—and perform Parameter-Efficient Fine-Tuning (PEFT) using your proprietary data. This creates a “Corporate Brain” that knows your business inside out.
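The article does not say which PEFT method is used. LoRA (Low-Rank Adaptation) is one widely used option, so the sketch below uses it to show why PEFT is cheap. LoRA freezes a weight matrix W (d × k) and trains two small factors: B (d × r) and A (r × k). The effective weight is W + (alpha / r) · B · A. The matrices and dimensions in the usage lines are illustrative assumptions. Plain Python lists stand in for a real tensor library.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when updating the full d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


def matmul(x, y):
    """Plain-Python matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w, b, a, alpha: float, r: int):
    """Return W + (alpha / r) * (B @ A), the merged weight used at inference."""
    scale = alpha / r
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Illustrative 4096 x 4096 projection with rank r = 8:
full = full_finetune_params(4096, 4096)   # 16,777,216
lora = lora_params(4096, 4096, 8)         # 65,536
print(f"{lora / full:.2%} of the parameters are trained")  # 0.39% ...

# Tiny merge example: 2 x 2 identity plus a rank-1 update.
print(merge_lora([[1, 0], [0, 1]], [[1], [2]], [[3, 4]], alpha=2, r=1))  # [[7.0, 8.0], [12.0, 17.0]]
```

Because only the small factors are trained and stored, a client's adapters stay compact. They can also be merged into the base weights for inference, as `merge_lora` shows.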

Phase 3: RAG Integration

We implement Retrieval-Augmented Generation (RAG) systems. RAG lets your Local LLM query your live internal databases (SQL, Vector Stores) and ground its responses in the retrieved records. This yields accurate, citation-backed answers and substantially reduces hallucinations, supporting trusted decision intelligence.
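The core RAG loop has three steps: retrieve the most relevant internal documents, place them in the prompt with their IDs, and instruct the model to answer only from those sources with citations. The toy sketch below uses bag-of-words cosine similarity in place of the embedding model and vector store a production system would use. The document IDs and texts are hypothetical. The resulting prompt would then be sent to the local model. Python's `\w` regex is Unicode-aware, so the tokenizer also splits Arabic text into words.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (Unicode-aware, so Arabic words are kept)."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], top_k: int = 2) -> list[str]:
    """IDs of the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda doc_id: cosine(q, Counter(tokenize(docs[doc_id]))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, docs: dict[str, str], top_k: int = 2) -> str:
    """Grounded prompt: retrieved sources tagged with IDs, plus citation rules."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, top_k))
    return (
        "Answer using only the sources below and cite them by ID. "
        "If the sources do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical internal knowledge base.
DOCS = {
    "HR-12": "Employees submit annual leave requests through the HR portal at least two weeks in advance.",
    "FIN-07": "Quarterly revenue forecast for the Riyadh branch.",
    "IT-03": "VPN access requires multi-factor authentication.",
}
print(build_prompt("How do employees submit annual leave requests?", DOCS, top_k=1))
```

The instruction to say "the sources do not contain the answer" does much of the work against hallucination. It gives the model an explicit alternative to guessing.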

The Future of AI in NEOM and Beyond

As NEOM builds what it describes as the world’s first cognitive city, demand for edge-deployed AI is set to grow sharply. Local LLMs running on edge devices could power everything from autonomous transport to personalized municipal services, while maintaining strict privacy standards.

iitwares.com is uniquely positioned to guide Saudi enterprises through this transition. We do not just build software; we build sovereign digital intelligence. By prioritizing Data Sovereignty and Local LLM architecture, we empower Saudi businesses to lead the global AI revolution without compromising their values or security.

Contact iitwares.com today to schedule a consultation on securing your AI future and ensuring full alignment with Vision 2030.